File Copy Performance for Large Files

The last few days, I’ve been trying to improve the way I copy large (2+ GB) files around, both locally and between systems.

I looked at 4 different programs and captured very simple stats using the time command. The programs tested were:

- cp

- scp

- rsync

- bigsync

I’d considered trying other programs like BigSync, but really wanted something that supported incremental backups to the same file and handled it without too much complexity. I would have liked to use zsync, but my understanding is that it works over HTTP and can’t be used for local copies. I wasn’t interested in setting up Apache with SSL between internal servers.

For network copies, some form of SSL encryption was used. That could be ssh tunnels, rsync over ssh, sshfs or simply scp. The OpenSSL libraries were not patched for higher performance.

Performance Testing is Hard

The results appear to be all over the place. This means I have at least 1 major flaw in my testing methods. I didn’t reboot the systems between test scenarios. I didn’t flush the cache, nor did I stop all other programs running. Basically, the systems involved were doing what they do all the time … running. I did avoid high CPU, IO and bandwidth tasks and I performed the tests during off-peak periods.
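Since the stats came from the time command, a small harness makes it easier to repeat the same copy several times and see how much run-to-run variation (caching, background load) is hiding in a single number. The following is a minimal sketch, not the procedure used for the results below; the function name `time_command` and the command line passed in are assumptions for illustration.

```python
import os
import subprocess
import sys
import time


def time_command(cmd):
    """Run cmd (a list of arguments) and return (real, user, sys) in seconds.

    user/sys are the CPU times of the child process, similar to what the
    shell's `time` reports. On non-Unix systems they may be reported as 0.
    """
    before = os.times()
    start = time.perf_counter()
    subprocess.run(cmd, check=True)
    real = time.perf_counter() - start
    after = os.times()
    user = after.children_user - before.children_user
    system = after.children_system - before.children_system
    return real, user, system


if __name__ == "__main__":
    # Usage (hypothetical paths): python timecopy.py cp /data/big.img /mnt/disk2/big.img
    command = sys.argv[1:]
    if command:
        for run in range(1, 4):  # three back-to-back runs of the same command
            real, user, system = time_command(command)
            print(f"run {run}: real {real:.3f}s user {user:.3f}s sys {system:.3f}s")
```

Back-to-back runs like this will still benefit from warm caches, so the first run and the later runs should be read separately.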

Anyway, here are the results.

Results

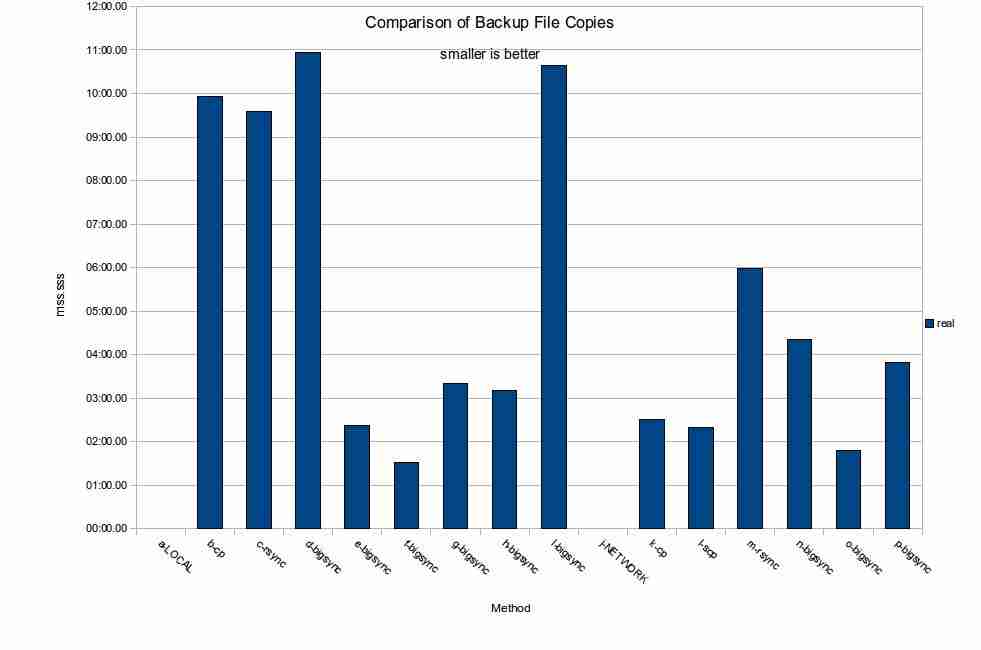

14 different test runs were performed. To understand the details, both the order of the tests and the program used need to be considered.

The table below provides more information about each scenario, in the order the tests were run. The first group of copies was performed within a single machine, across file systems on different disks. The second group was over a GigE network which routinely sees 650 Mbps throughput, so the network was not the constraint; the disk IO subsystems and the CPU required by the program used were more important. The use of FUSE sshfs could also have impacted performance.

A table showing the details for each scenario is available.

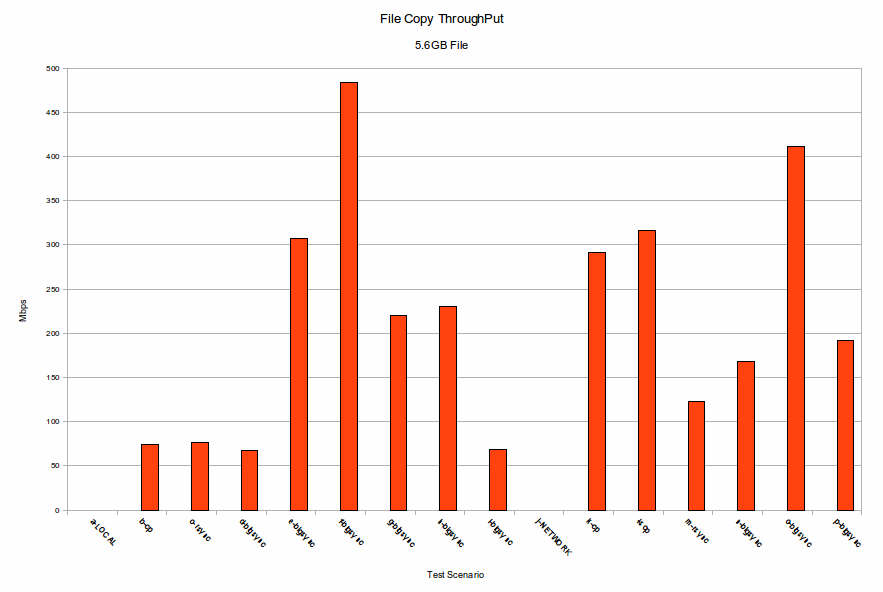

Network Throughput Chart

Below is a graph that shows the bandwidth for each scenario. As expected, disk throughput was the limiting factor, not network throughput. Disks outpace the network for small files, but not for big ones, where disk performance becomes the limit. About 70 Mbps is the throughput seen with cp, scp, and rsync for the first copy (before any differencing can be performed). As expected, higher perceived throughput is achieved with differential copies, but not as high as I would have thought. bigsync provided the highest effective throughput with the 3rd run at about 580 Mbps.
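"Effective" or "perceived" throughput here means the full file size divided by the elapsed time, even when a differential copy only moved a fraction of the bytes. A minimal sketch of that arithmetic follows; the function name and the sample numbers are illustrative assumptions, not figures from the tests.

```python
def throughput_mbps(size_bytes, seconds):
    """Effective throughput in megabits per second (1 Mb = 1,000,000 bits)."""
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    return size_bytes * 8 / seconds / 1_000_000


if __name__ == "__main__":
    # Hypothetical example: a 2 GB file (2,000,000,000 bytes) and two made-up timings.
    file_size = 2_000_000_000
    for label, elapsed in [("first copy", 240.0), ("differential copy", 30.0)]:
        print(f"{label}: {throughput_mbps(file_size, elapsed):.1f} Mbps")
```

The same file size is used for both lines on purpose: the differential run looks faster because less data is actually transferred, not because the disks or network sped up.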

bigsync runs showed some interesting trends. The first run, before the target file exists, is slightly slower than cp or scp. The 2nd run was at least 100% faster and sometimes almost 500% faster. The 3rd run was another 50% faster than the 2nd run, but that was almost certainly due to disk caches.

The bigsync process leaves a file in the target directory which contains SHA-1 signatures for each block. Also, if the target argument does not specify a filename, then the source file name is not utilized. This seems like a poor design choice. The C code is simple enough to make that modification an easy change.
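The per-block signature idea can be sketched roughly as below. This is not bigsync's actual code (which is C) and is not meant to mirror its file format; the block size, the function name `sync_blocks`, and keeping the signatures in a Python list rather than a side file are all assumptions made to keep the example short.

```python
import hashlib
import os

BLOCK_SIZE = 1024 * 1024  # hypothetical block size; bigsync's real value may differ


def sync_blocks(src, dst, old_sigs, block_size=BLOCK_SIZE):
    """Copy only the blocks of src whose SHA-1 differs from old_sigs.

    old_sigs is the signature list returned by the previous run
    (an empty list for the first run). Returns (new_sigs, blocks_written).
    """
    new_sigs = []
    written = 0
    mode = "r+b" if os.path.exists(dst) else "w+b"
    with open(src, "rb") as fin, open(dst, mode) as fout:
        index = 0
        while True:
            block = fin.read(block_size)
            if not block:
                break
            sig = hashlib.sha1(block).hexdigest()
            new_sigs.append(sig)
            # Write the block only if it is new or its signature changed.
            if index >= len(old_sigs) or old_sigs[index] != sig:
                fout.seek(index * block_size)
                fout.write(block)
                written += 1
            index += 1
        # Keep the target the same length as the source.
        fout.truncate(fin.tell())
    return new_sigs, written
```

Note that even when nothing changed, a scheme like this still reads and hashes the whole source file on every run, so repeat runs save write and transfer time but not read or CPU time.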

The use of FUSE sshfs probably slowed things down slightly, but network performance didn’t seem to be the bottleneck anywhere in these tests, as shown by internal system disk-to-disk copy performance, so I don’t plan to perform any additional network tuning.

What Does This Mean?

The data doesn’t show any trends and definitely didn’t show what I’d expected. This means the tests were flawed … or that it doesn’t really matter which of the methods is used.

However, the data did confirm a few things that I already knew.

- rsync isn’t efficient with large files; we are better off copying the files without rsync.

- I expected the first bigsync to be similar to a copy or scp in time. It was.

- I expected non-first bigsyncs to be much quicker, especially for an unchanged file. The bigsync code is not very complex.

- I learned that bigsync had issues accessing VirtualBox shared file systems from a host OS. It never worked. When I reviewed the code, I couldn’t see any complex file system calls, so I have concluded it is a bug in VirtualBox, based on other strange behaviors and poor performance that I’ve seen with VirtualBox host-shared files.

I’m still looking for a solution and I need to work on my test procedures.

So I started doing a little more research and came across GridFTP and HPN-ssh along with TCP-Tuning suggestions.

HPN-SSH would be more interesting if it were available in the repositories. At this time it appears to be available only as a patch that needs to be applied to the openssh source code. Unacceptable for my use.

GridFTP is promising: it supports older FTP servers, can restart transfers, and supports encrypted transfers via X.509 certs.

If security were not mandatory, there appears to be a netcat trick that improves performance. Also, since openssl uses internal buffers, TCP tuning in the /etc/sysctl.conf doesn’t help.

Based on this research, I’m looking for

After tuning the TCP settings on both the client and server side, the bigsync network copy returned:

real 4m41.968s

user 1m31.520s

sys 0m15.360s

2nd run:

real 2m17.865s

user 1m31.820s

sys 0m4.090s

3rd run:

real 1m32.036s

user 1m29.380s

sys 0m2.520s

4th run:

real 1m31.482s

user 1m30.040s

sys 0m1.350s

These were all performed back-to-back.
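To compare the four runs above, the `time` output can be converted to seconds. The sketch below does that for the real and user figures quoted above; the helper name `parse_time` is an assumption, and the interpretation is only an inference from these numbers.

```python
import re


def parse_time(text):
    """Convert a `time`-style value such as '4m41.968s' to seconds."""
    match = re.fullmatch(r"(\d+)m(\d+(?:\.\d+)?)s", text.strip())
    if match is None:
        raise ValueError(f"unrecognized time value: {text!r}")
    return int(match.group(1)) * 60 + float(match.group(2))


# (real, user) pairs copied from the four runs above.
RUNS = [
    ("4m41.968s", "1m31.520s"),
    ("2m17.865s", "1m31.820s"),
    ("1m32.036s", "1m29.380s"),
    ("1m31.482s", "1m30.040s"),
]

if __name__ == "__main__":
    first_real = parse_time(RUNS[0][0])
    for number, (real_text, user_text) in enumerate(RUNS, start=1):
        real = parse_time(real_text)
        user = parse_time(user_text)
        print(
            f"run {number}: real {real:.1f}s, user {user:.1f}s, "
            f"user/real {user / real:.2f}, speedup vs run 1 {first_real / real:.2f}x"
        )
```

User time stays at roughly 90 seconds in every run while real time shrinks toward it, which suggests the later runs are limited by CPU work (presumably the block hashing) rather than by disk or network. That is a reading of the numbers, not something these tests measured directly.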

Since these tests show that disk performance, not the network, is the bottleneck, we need to understand the capabilities of each file system involved in these tests.

The Linux Journal covered disk performance testing with fio a few years ago. Some test scenario setup is mandatory before running a test. The tool appears to have as much or as little configuration as you like. There appear to be 3 mandatory settings before you can begin, and a test configuration file is needed. That’s good, since we will want to run identical tests on multiple disks, on multiple computers. All sorts of settings can be tuned to help determine the best performance settings.

Of course, they also mention using hdparm -t to get a baseline of performance without a file system, but we all use file systems, so those tests are less than useful for the real world. I think of them as the maximum possible before the file system overhead is added.

Anyway, having reproducible disk performance tests is fantastic, once the setup is done.