Ubuntu Hardy Depots Missing?

    Err http://ppa.launchpad.net hardy/main Packages
      404 Not Found
    Err http://ppa.launchpad.net hardy/universe Packages
      404 Not Found
    W: Failed to fetch http://ppa.launchpad.net/madman2k/ubuntu/dists/hardy/main/binary-i386/Packages.gz  404 Not Found
    W: Failed to fetch http://ppa.launchpad.net/madman2k/ubuntu/dists/hardy/universe/binary-i386/Packages.gz  404 Not Found
    E: Some index files failed to download, they have been ignored, or old ones used instead.

Ouch.

I knew Hardy support would eventually go away, but I expected it to last at least until the next LTS release, which is still 4 months away.

Fixed, 3 days later.
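If you hit the same 404s, it helps to know which source entries point at the failing host before deciding whether to wait or comment them out. Here is a minimal sketch, assuming GNU grep and the standard apt locations; the function name `list_sources_for_host` is made up for illustration.

```shell
#!/bin/sh
# List active (not commented-out) apt source entries that mention a host.
# Usage: list_sources_for_host HOST [FILE_OR_DIR...]
list_sources_for_host() {
    host=$1
    shift
    # Fall back to the standard apt locations when no paths are given.
    [ "$#" -gt 0 ] || set -- /etc/apt/sources.list /etc/apt/sources.list.d
    # -r recurse, -n line numbers, -H always print the file name,
    # -s stay quiet about missing paths, -F treat HOST as a fixed string.
    # The second grep drops entries whose content starts with '#'.
    grep -rnHsF -- "$host" "$@" | grep -v ':[0-9]*:[[:space:]]*#'
}
```

Running `list_sources_for_host ppa.launchpad.net` prints each matching entry as `file:line:entry`, which is enough to find the line to comment out until the archive comes back.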

Virtualization Survey, an Overview

Sadly, which virtualization is best for Linux isn’t an easy question to answer. Many different factors go into it. While I cannot answer the question for you, since your needs and mine are different, I can provide a little background on what I chose and why. We won’t discuss why you should be running virtualization or which specific OSes to run. You already know why.

Key things that go into my answer

- I’m not new to UNIX. I’ve been using UNIX since 1992.

- I don’t need a GUI. Actually, I don’t want a GUI and the overhead that it demands.

- I would prefer to pay for support, when I need it, but not be forced to pay to do things we all need to accomplish – backups for example.

- My client OSes won’t be Windows. They will probably be the same OS as the hypervisor hosting them. There are some efficiencies in doing this like reduced virtualization overhead.

- I try to avoid Microsoft solutions. They often come with additional requirements that, in turn, come with more requirements. Soon, you’re running MS-ActiveDirectory, MS-Sharepoint, MS-SQL, and lots of MS-Windows Servers. With that come the MS-CALs. No thanks.

- We’re running servers, not desktops. Virtualization for desktops implies some other needs (sound, graphics acceleration, USB).

- Finally, we’ll be using Intel Core 2 Duo or better CPUs. They will have VT-x support enabled and 8GB+ of RAM. AMD makes fine CPUs too, but during our recent upgrade cycle, Intel had the better price/performance ratio.

Major Virtualization Choices

- VMware ESXi 4 (don’t bother with 3.x at this point)

- Sun VirtualBox

- KVM as provided by RedHat or Ubuntu

- Xen as provided by Ubuntu

I currently run all of these except KVM, so I think I can say which I prefer and which is proven.

ESXi 4.x

I run this on a test server just to gain knowledge. I’ve considered becoming VMware Certified and may still get certified, which is really odd. I don’t believe many mainstream certifications mean much, except CISSP, VMware, Oracle DBA and Cisco. I dislike that VMware has disabled things that used to work in prior versions to encourage full ESX deployments over the free ESXi. Backups at the hypervisor level, for example. I’ve been using some version of VMware for about 5 years.

A negative: VMware can be picky about which hardware it will support. Always check the approved hardware list. Almost no desktop motherboard has a supported network card, and ESXi may not like the disk controller either, so expect to spend another $30-$200 on networking.

ESXi is rock solid. No crashes, ever. There are many very large customers running thousands of VMware ESX server hosts.

Sun VirtualBox

I run this on my laptop because it is the easiest hypervisor to use. Also, since this works on desktops, it includes USB pass thru capabilities. That’s a good thing, except that it is also the least stable hypervisor that I use. That system locks up about once a month for no apparent reason. That is unacceptable for a server under any conditions. The host OS is Windows7 x64, so that could be the stability issue. I do not play on this Windows7 machine. The host OS is used almost exclusively as a platform for running VirtualBox.

Until VirtualBox gains stability, it isn’t suitable for use on servers, IMHO.

Xen (Ubuntu patches)

I run this on 2 servers each running about 6 client Linux systems. During system updates, another 6 systems can be spawned as part of the backout plan or for testing new versions of stuff. I built the systems over the last few years using carefully selected name brand parts. I don’t use HVM mode, so each VM runs with 97% of native hardware performance by running the same kernel.

There are downsides to Xen.

- Whenever the Xen kernel gets updated, this is a big deal, requiring the hypervisor be rebooted. In fact, I’ve had to reboot the hypervisor 3 times after a single kernel update before it takes in all the clients. Now I plan for that.

- Kernel modules have to be manually copied into each VM, which isn’t a big deal, but does have to be done.

- I don’t use a GUI, that’s my preference. If you aren’t experienced with UNIX, you’ll want to find a GUI to help create, configure and manage Xen infrastructure. I have a few scripts – vm_create, kernel_update, and lots of chained backup scripts to get the work done.

- You’ll need to roll your own backup method. There are many, many, many, many options. If you’re having trouble determining which hypervisor to use, you don’t have a chance to determine the best backup method. I’ve discussed backup options extensively on this blog.

- No USB pass thru, that I’m aware of. Do you know something different?
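The module copy in the list above is easy to script. This is only a sketch, not my actual kernel_update script: it assumes each guest is reachable over ssh as root and that rsync is installed on both ends, and the function name `module_sync_commands` is hypothetical. It prints the commands rather than running them, so you can read them first.

```shell
#!/bin/sh
# Print one rsync command per guest that copies the modules for kernel
# version KVER from the hypervisor into the guest's /lib/modules.
# Usage: module_sync_commands KVER GUEST...
module_sync_commands() {
    kver=$1
    shift
    for guest in "$@"; do
        # -a preserves permissions, ownership and timestamps.
        printf 'rsync -a /lib/modules/%s/ root@%s:/lib/modules/%s/\n' \
            "$kver" "$guest" "$kver"
    done
}
```

Once the output looks right, something like `module_sync_commands "$(uname -r)" vm1 vm2 | sh` would run it, where `vm1` and `vm2` stand in for your guest host names.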

I’ve only had 1 crash after a kernel update with Xen and that was over 8 months ago. I can’t rule out cockpit error.

Xen is what Amazon EC2 uses. They have millions of VMs. Now, that’s what I call scalability. This knowledge weighed heavily on my decision.

KVM

I don’t know much about KVM. I do know that both RedHat and Ubuntu are migrating to KVM as the default virtualization hypervisor in their servers since the KVM code was added to the Linux kernel. Canonical’s 10.04 LTS release will also include an API 100% compatible with Amazon’s EC2 API, binary compatible VM images, and VM cluster management. If I were deploying new servers today, I’d at least try the beta 9.10 Server and these capabilities. Since we run production servers on Xen, I don’t see us migrating until the applications on them support KVM and the specific version of Ubuntu it requires.

Did I miss any important concerns?

It is unlikely that your key things match mine. Let me know in the comments.

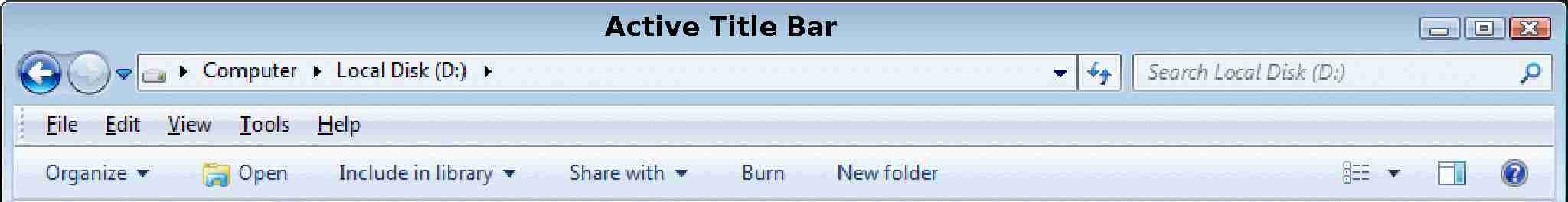

Solved - Change Windows7 Window Border Thickness and X-Mouse

Windows7 is an improvement over other versions in many ways, except they decided to waste too much screen on pretty, thick borders by default. Additionally, the window title bar seems to be 2x larger than under XP. No thanks.

It has bothered me for a few months, but not enough to search and try a few things until today. Even with the changes, the window borders and title bar are still too thick for my tastes, but at least it is a little better.

The settings can be found in the Window Color and Appearance settings of Windows7. The items to change are:

- Active Title Bar – size 17 is the smallest it will accept

- Border Padding – size 0 is the smallest setting

- Active Window Border – size 1 is the smallest

You can also make scroll bars thinner, if you like. Initially, I went too thin and had to make them a little larger so usability wasn’t completely lost. Here’s the resulting border. Sadly, there is still way too much wasted space in my opinion.

I have no use for the part of the window with the Organize or Include in Library stuff. That entire menu is worthless to me. Let me know if you know how to remove that section. Please.

If you miss X-Mouse from Tweak-UI in the PowerToys, here’s a solution to have the focus follow the mouse. I like option 3 and have pulled the regedit file down. Unix people will appreciate this. It is nice to have the active window not necessarily pulled to the foreground just because it is active.

Ubuntu 10.04 Photo Management - Looking Ahead

You may not have heard that the Ubuntu guys are planning to remove The Gimp from the default desktop installs in the next LTS release of Ubuntu. Good. The Gimp is very capable, but I’ve never found a use for it. Never. It is too complex for my rotate, crop, remove red-eye needs.

There are a few excellent options, but it seems most of them have issues for me. I like a lite desktop – no Gnome, no KDE, so anything that requires those libraries is to be avoided. The only thing worse is to include Mono. Mono is a FOSS implementation of Microsoft’s .NET libraries.

I generally deal with photos very little, unless I’m using scripts to attach GPS lat/lon to the EXIF data in the files or rotate them. Recently, I installed digiKam, a KDE app, but only because it made attaching GPS EXIF data easier. I avoid using it.

Well, I came across an article concerning 3 Gimp replacements that got me thinking. That link was really, really slow for me too. Of the choices, only 1, f-spot, is in the default repositories for my LTS distribution. For fun, I did an install; here’s the dependency data.

    $ sudo apt-get install f-spot
    Reading package lists... Done
    Building dependency tree
    Reading state information... Done
    The following extra packages will be installed:
      cli-common libart2.0-cil libflickrnet2.1.5-cil libgconf2.0-cil
      libglade2.0-cil libglib2.0-cil libgnome-vfs2.0-cil libgnome2.0-cil
      libgtk2.0-cil libgtkhtml3.14-19 libgtkhtml3.16-cil libmono-addins-gui0.2-cil
      libmono-addins0.2-cil libmono-cairo1.0-cil libmono-cairo2.0-cil
      libmono-corlib1.0-cil libmono-corlib2.0-cil libmono-data-tds1.0-cil
      libmono-data-tds2.0-cil libmono-security1.0-cil libmono-security2.0-cil
      libmono-sharpzip0.84-cil libmono-sharpzip2.84-cil libmono-sqlite2.0-cil
      libmono-system-data1.0-cil libmono-system-data2.0-cil
      libmono-system-web1.0-cil libmono-system-web2.0-cil libmono-system1.0-cil
      libmono-system2.0-cil libmono0 libmono1.0-cil libmono2.0-cil
      libndesk-dbus-glib1.0-cil libndesk-dbus1.0-cil mono-common mono-gac mono-jit
      mono-runtime sqlite
    Suggested packages:
      monodoc-gtk2.0-manual libgtkhtml3.14-dbg libmono-winforms2.0-cil libgdiplus
      libmono-winforms1.0-cil sqlite-doc
    Recommended packages:
      dcraw libmono-i18n1.0-cil libmono-i18n2.0-cil

WOW! That’s a bunch of crap to be forced to load for 1 app that I’ll use perhaps once a month. No thanks. Further, the last package, sqlite, is concerning, since I use the sqlite3 package all the time. Forcing an older package – boo.

I hope the Ubuntu guys consider bloat, which is what they are trying to get away from by not including The Gimp after all. Some people like iTunes and others like the original WinAMP. I’m in the latter group. Keep it simple, please.

SysUsage 3.0 Installation Steps

We’ve been using SysUsage to monitor general performance of our Linux servers for a few years. Version 3 was released recently with a new web GUI and simpler installation, but not quite the trivial apt-get install that we’d all love. View a demo.

Anyway, go grab a copy of the source tgz and follow along.

    tar zxvf SysUsage-Sar-3.0.tar.gz
    cd Sys*0
    sudo apt-get install sysstat rrdtool librrds-perl
    perl Makefile.PL
    make
    sudo make install
    sudo crontab -e

Drop these lines into the root crontab.

    */1 * * * * /usr/local/sysusage/bin/sysusage > /dev/null 2>&1
    */5 * * * * /usr/local/sysusage/bin/sysusagegraph > /dev/null 2>&1

I performed these steps using Cluster SSH on almost all our Ubuntu 8.04.x servers; each installation worked.

If you have Apache running in the normal place, browse over to http://localhost/sysusage/.

If you don’t run a web server, try firefox /var/www/htdocs/sysusage/index.html to see the results.

Further, our simple rsync over ssh scripts to pull the SysUsage output back to a central performance server are still working. Some of the old data from the v2.12 of the program is still inside the RRD files. It isn’t clear at this point whether the data will be used in the new graphs or not. It takes about a day for the graphs to become useful.
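For anyone who wants the same central collection, a pull script along these lines would do it. This is a sketch, not our production script: the destination root `/srv/perf` and the function names are assumptions, while the source directory is the report path mentioned above. It only prints the commands unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
# Pull each server's SysUsage report directory to a central collection host.
# Reports land in DEST_ROOT/<hostname>/ (an assumed layout).
SRC_DIR=${SRC_DIR:-/var/www/htdocs/sysusage}   # report path from the post
DEST_ROOT=${DEST_ROOT:-/srv/perf}              # hypothetical destination

# Echo the command when DRY_RUN=1 (the default here); run it otherwise.
run() {
    if [ "${DRY_RUN:-1}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

pull_sysusage() {
    for host in "$@"; do
        run mkdir -p "$DEST_ROOT/$host"
        # -a keeps timestamps and permissions, -z compresses in transit,
        # -e ssh selects the transport.
        run rsync -az -e ssh "$host:$SRC_DIR/" "$DEST_ROOT/$host/"
    done
}
```

Calling `pull_sysusage web1 db1` from cron on the performance server, with ssh keys already in place, keeps one directory per monitored host; `web1` and `db1` are placeholder host names.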

Trivial Lifehacker Profile Monitoring Script

Lifehacker is a site that many of us watch daily for tips. If you join the community, you may find that the LH site can sometimes become … er … slow. The cause of this can be many things, but personally, I think they’ve gone overboard with all the javascript.

Someone mentioned he had written a real-time notification script to tell him about replies to his posts, and he asked others what they would like from the script. I started thinking and determined it would be a fairly trivial script to do what I wanted – email a list of replies in simple HTML.

As with all scripts, they are never really done and prone to tweaks for the next year. I think the next tweak will be to try the feed.xml that LH provides. Perhaps it will be smaller, faster and easier to parse?

So I attempted to attach the script to this article using two tools built into the blogging system. Both have failed.

- Upload – so RSS feed readers see an attachment link – no joy

- Excerpt – I’ve used this in previous versions of the blog successfully. No more. The issue is probably due to my theme.

Anyway, here’s the code

    #!/usr/bin/perl
    ######################################################
    # Display Lifehacker Profile data
    #   Recent Replies (first pg only)
    #   Followers
    #   Friends
    #
    # Known Linux Dependencies:
    #   - perl and LWP and Getopt modules
    #   - sudo cpan -i LWP::Simple
    #
    # Installation
    #   1) Change the Your-LH-Profile-name-Here below to yours
    #   2) chmod +x lh_profile_monitor.cgi
    #   3a) Either run as is: $0 > output.html
    #       Or
    #   3b) Setup as a CGI on your web server
    #       Or
    #   3c) Setup as a crontab entry which will automatically email the results
    #
    # $Id: lh_profile_monitor.cgi,v 1.5 2009/12/16 14:32:55 jdfsdp Exp jdfsdp $
    ######################################################
    use strict;
    use LWP::Simple;
    use Getopt::Long;

    sub life_hacker_data();

    my $profile_name = "Your-LH-Profile-name-Here";
    my $doHelp   = 0;
    my $download = 1;

    my $opts_ok = GetOptions("help|?"     => \$doHelp,
                             "profile=s"  => \$profile_name,
                             "download=i" => \$download);

    # Build the URL after option parsing so that -profile takes effect.
    my $profile_page = "http://lifehacker.com/people/$profile_name/";
    # my $profile_page = "http://lifehacker.com/people/$profile_name/feed.xml";
    chomp(my $date = `date +%r`);

    # The HTML tags in this header were stripped when the post was published;
    # this is a minimal reconstruction around the surviving refresh and title text.
    my $html_header = "Content-type: text/html\n\n"
        . "<html><head>\n"
        . "<meta http-equiv=\"refresh\" content=\"600\">\n"
        . "<title>$profile_name-Recent LifeHacker</title>\n"
        . "</head><body>\n";
    my $html_footer = "</body></html>";

    ######################################################
    sub Usage()
    {
        print "\nUsage:\n  $0 [-download 0/1] -profile LifeHacker_Profile\n";
        exit 1;
    }

    ######################################################
    # main()
    #   The output is a filtered html file to stdout
    Usage() if ( $doHelp || !$opts_ok );

    # Grab the new page
    # `/usr/local/bin/curl $profile_page > $profile_tmp_file` if ($download);
    my $content = '';
    $content = get( $profile_page ) if ($download);
    $content = '' unless defined $content;   # get() returns undef on failure
    my @lh_content = split(/\n/, $content);

    print "$html_header\n";
    print "List of Recent Replied to Messages\n"
        . "Pulled from <a href=\"$profile_page\">$profile_page</a> at $date\n";
    my $replies = life_hacker_data();
    print "$replies\n";
    print "\n$html_footer\n\n";

    ######################################################
    sub life_hacker_data()
    {
        # Display the total count of
        #   Friends
        #   Followers
        #   Msgs with replies
        my $ret = "";
        foreach (@lh_content) {
            if (m#/friends/$profile_name|/followers/$profile_name|replied#) {
                $ret .= $_;
            }
        }
        # Two substitutions on HTML tags are omitted here: their patterns
        # did not survive publication.
        $ret =~ s#href="/friends#href="http://lifehacker.com/friends#g;
        $ret =~ s#href="/followers#href="http://lifehacker.com/followers#g;
        $ret =~ s#^[.]Click here to view all[.]$##ig;  # Remove excess lines
        $ret =~ s#view all##ig;                        # Remove excess lines
        $ret =~ s#»##ig;                               # Remove excess lines
        return $ret;
    }
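If you just want to see what the filter in life_hacker_data keeps, the same idea fits in a couple of lines of shell. This is a rough equivalent using grep and sed, not a port of the whole script; the function name `lh_filter` is made up, and it assumes the profile name contains no regex metacharacters.

```shell
#!/bin/sh
# Read HTML on stdin, keep only lines that mention the profile's
# friends/followers links or a reply, and make relative links absolute.
# Usage: lh_filter PROFILE_NAME < page.html
lh_filter() {
    profile=$1
    grep -E "/friends/$profile|/followers/$profile|replied" |
        sed -e 's#href="/friends#href="http://lifehacker.com/friends#g' \
            -e 's#href="/followers#href="http://lifehacker.com/followers#g'
}
```

Feeding it a saved copy of the profile page, or piping `curl -s` output into it, shows quickly whether the match patterns still fit the page before you bother with the CGI or cron setup.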